Summary

This study analyses how large language models and large reasoning models handle tasks requiring different cognitive skills by examining their internal activations and output behaviour. We explore when different forms of reasoning (e.g., planning, arithmetic) emerge and whether such capabilities can be clustered by their underlying cognitive, memory retrieval, and linguistic demands.

Application Details

This Research Associate position in LLM Reasoning is one of four roles being advertised under the same University of Bath job reference (AP12866). The official job portal lists the opening under the broader title of “Visual Computing”.

To ensure your application is considered for the correct role, please follow these steps:

- Use the official “Apply Online” link found on the main university job page.

- In your cover letter or supporting statement, please state that you are applying for the project titled: “Exploring the capabilities of state-of-the-art large language models and Large Reasoning Models”.

Applicants to this position will be eligible for a Global Talent Visa.

Please apply for the post at: https://www.bath.ac.uk/jobs/Vacancy.aspx?ref=AP12866

Detailed Description

This is an exciting opportunity for a highly skilled and motivated researcher with an interest in Natural Language Processing and Deep Learning to explore the capabilities of state-of-the-art large language models (e.g., GPT-4o) and Large Reasoning Models (e.g., OpenAI o1, DeepSeek-R1).

You’ll join a fast-growing, ambitious team led by Professor Nello Cristianini, a leading AI expert whose work ranges from SVMs to recent books on LLMs, including Machina Sapiens and The Shortcut.

The selected candidate will be co-supervised by Dr Harish Tayyar Madabushi. His work on LLM reasoning was included in the discussion paper on the Capabilities and Risks of Frontier AI, one of the foundational research works for discussions at the UK AI Safety Summit held at Bletchley Park.

The AI research group at the University of Bath offers the perfect opportunity for candidates interested in Large Language Models to work in an environment focused on developing their research and NLP skills and achieving high-impact publications at top NLP venues.

Technical Details

Large Language Models (LLMs) are fundamentally different from all previous forms of machine learning. Their ability to solve a range of tasks, many of which would require advanced reasoning in humans, emerges primarily from next-token prediction training objectives. However, despite their now extensive deployment, the principles governing these capabilities remain poorly understood. Furthermore, their failure modes are unpredictable, even in scenarios where they otherwise perform remarkably well, and this is a significant concern.

Researchers disagree about exactly how LLMs function: some believe that LLMs have AGI-like emergent intelligence, while others regard them as sophisticated template-completion systems. Recent research at the University of Bath has been actively exploring these inherent capabilities and how variations on existing chain-of-thought methods can be used to improve reasoning in LLMs.

This project aims to accelerate this work and covers a range of capabilities and models, including the very latest state-of-the-art reasoning models trained with reinforcement learning, such as OpenAI o1 and DeepSeek-R1.

Contact

For more information on this position, please contact Dr Harish Tayyar Madabushi at htm43@bath.ac.uk.

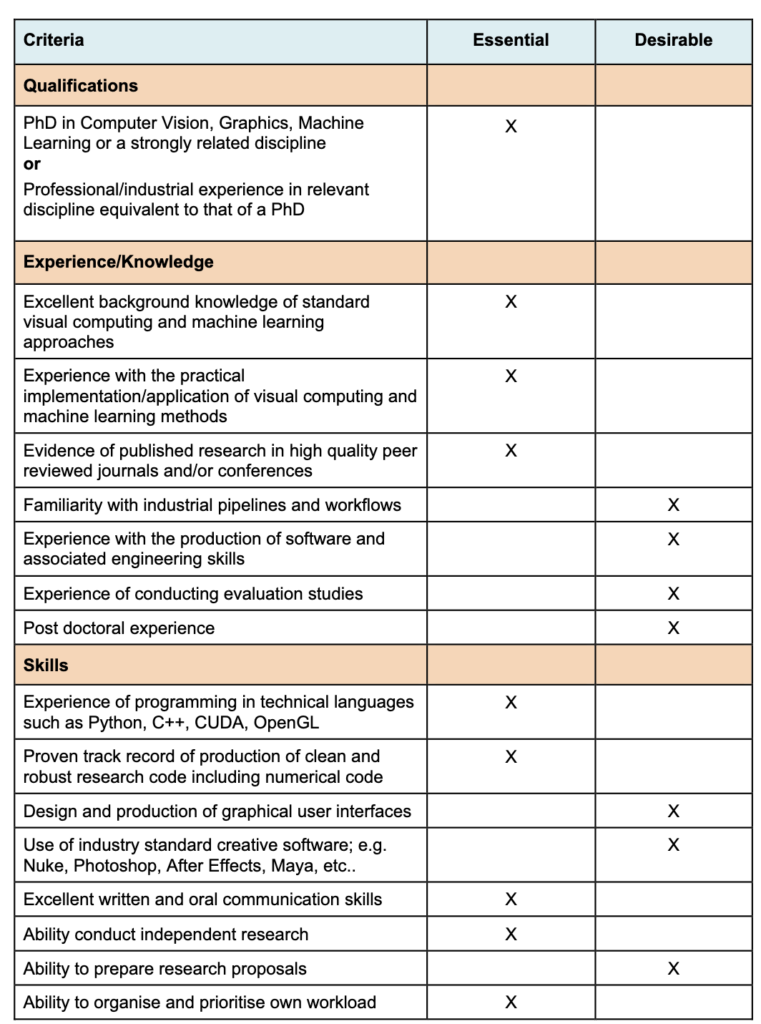

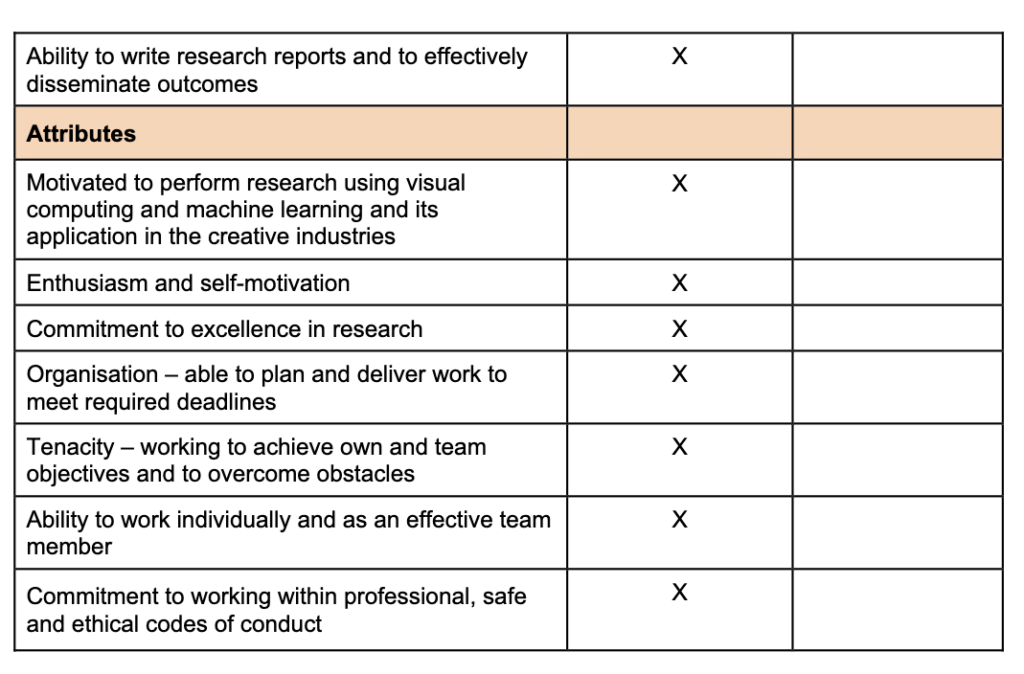

Person Specifications